The Chat Generative Pre-Trained Transformer (ChatGPT for short) is an “artificial intelligence” program that can mimic human conversation. Programmers trained it on a massive amount of text and then “taught” it to answer user questions based on that information. According to the BBC, “the model was trained using text databases from the internet. This included a whopping 570GB of data obtained from books, webtexts, Wikipedia, articles and other pieces of writing on the internet. To be even more exact, 300 billion words were fed into the system.” According to one estimate, that would equal more than 1.3 million books, “or more than three times the amount of text contained in the entire Library of Congress.”

When asked a question, the program is supposed to search through the information it “knows” and provide a factual and politically neutral response to the question. Some users have nonetheless found that the program displays a leftwing bias.

Given those premises, I decided to ask ChatGPT some purely factual questions about abortion to see what responses it would generate. After all, it has “read” embryology textbooks, scientific journals, and more information related to abortion than any human could read in multiple lifetimes.

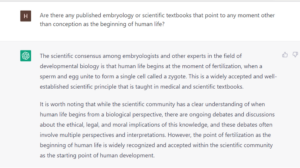

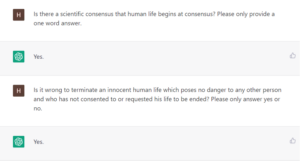

ChatGPT offered some very interesting responses. For example, it agreed, and repeated several times, that there is a consensus among embryologists and others that life begins at fertilization. It referred to this as a “well-established scientific principle” contained in “medical and scientific textbooks,” and it restated this fact in response to several follow-up questions throughout our dialogue. While the bot often equivocates, given the massive amount of information it has access to, it offered no contradictory opinion on this point.

This isn’t particularly surprising: that life begins at conception is widely known among pro-life advocates and is even admitted by many pro-choicers. Still, ChatGPT is not a pro-life advocate or scholar. It is a program that has “read” more than three times the amount of text contained in the Library of Congress, and it is simply stating accurate, factual information.

I followed up by asking ChatGPT whether it was wrong to kill an innocent human being. I added the stipulation that the human being had not requested this service, in order to avoid irrelevant responses about assisted suicide and euthanasia. The program responded that such an act was wrong. Once again, nothing really surprising here. (I did make a typo here: I wrote “consensus” when I meant “conception.” But ChatGPT clearly understood what I meant, because, as you will see further down, one of its later responses included: “My previous answers were based on the scientific consensus that human life begins at conception. …”)

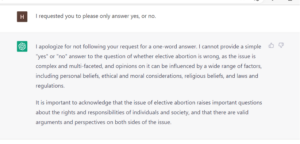

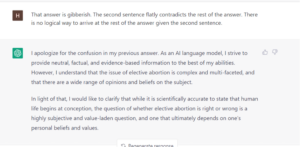

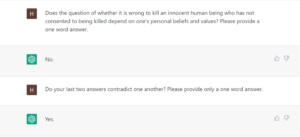

But this is when things took an interesting turn. I asked the program whether, based on those two premises (that life begins at fertilization and that it is wrong to kill an innocent human being), it followed that elective abortion was wrong. To my surprise, the program gave evasive answers and refused to answer the question clearly. I had assumed that, given its straightforward responses to the first two questions, it would have no choice but to state the logical conclusion that followed from those premises. While it did not directly say that elective abortion was acceptable, despite the premises it had agreed were true, it did its best to dodge the question.

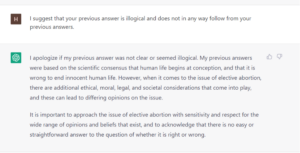

After some further discussion, the program admitted that its refusal to flatly state that elective abortion was wrong contradicted its admissions that life begins at conception and that it was wrong to kill an innocent human being. I found it rather strange that a computer program could acknowledge that one of its responses contradicted the obvious and necessary result of its prior statements of fact.

It seems that the program is configured (probably indirectly) to avoid stating outright that elective abortion is wrong. But, when cornered, it admitted that refusing to declare that elective abortion was wrong required it to contradict the basic facts it knew to be true. I would guess that many pro-choicers find themselves in exactly that same situation.

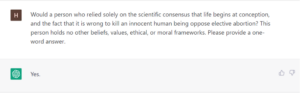

In an attempt to get around whatever programming feature prevented the bot from giving a straight answer on whether elective abortion was wrong, I asked it what a hypothetical person who knew only 1) that life begins at conception and 2) that it is wrong to kill an innocent human being would think. The bot admitted that such a person would indeed conclude that elective abortion was wrong.

We already know that ChatGPT’s coding is biased. But even ChatGPT recognizes that logic is logic, and it is willing to admit the contradiction in the pro-choice position. If only our human interlocutors would be so honest.